AI models can help monitor greenhouse gases and forecast extreme weather events, as the joint work of IBM and NASA has demonstrated.

However, a pressing question remains: what is the environmental cost of running these energy-intensive models? The environmental footprint of data centers depends on factors such as electricity and water consumption, as well as how long the equipment lasts before it must be replaced.

A report by Climatiq found that cloud computing accounts for 2.5% to 3.7% of global greenhouse gas emissions, more than the 2.4% attributed to commercial flights. These figures are a couple of years old and have likely risen since, given the rapid growth of artificial intelligence.

AI models are voracious consumers of energy, and the Lincoln Laboratory Supercomputing Center (LLSC) at the Massachusetts Institute of Technology saw a surge in the number of AI jobs running in its data centers. As energy consumption climbed, computer scientists at MIT began exploring more efficient ways to run these AI workloads.

“Energy-aware computing is not a widely explored research area, as many have held onto their data,” explains Vijay Gadepally, a senior staff member at LLSC leading energy-aware research efforts. “Someone has to initiate this effort, and we hope that others will follow suit.”

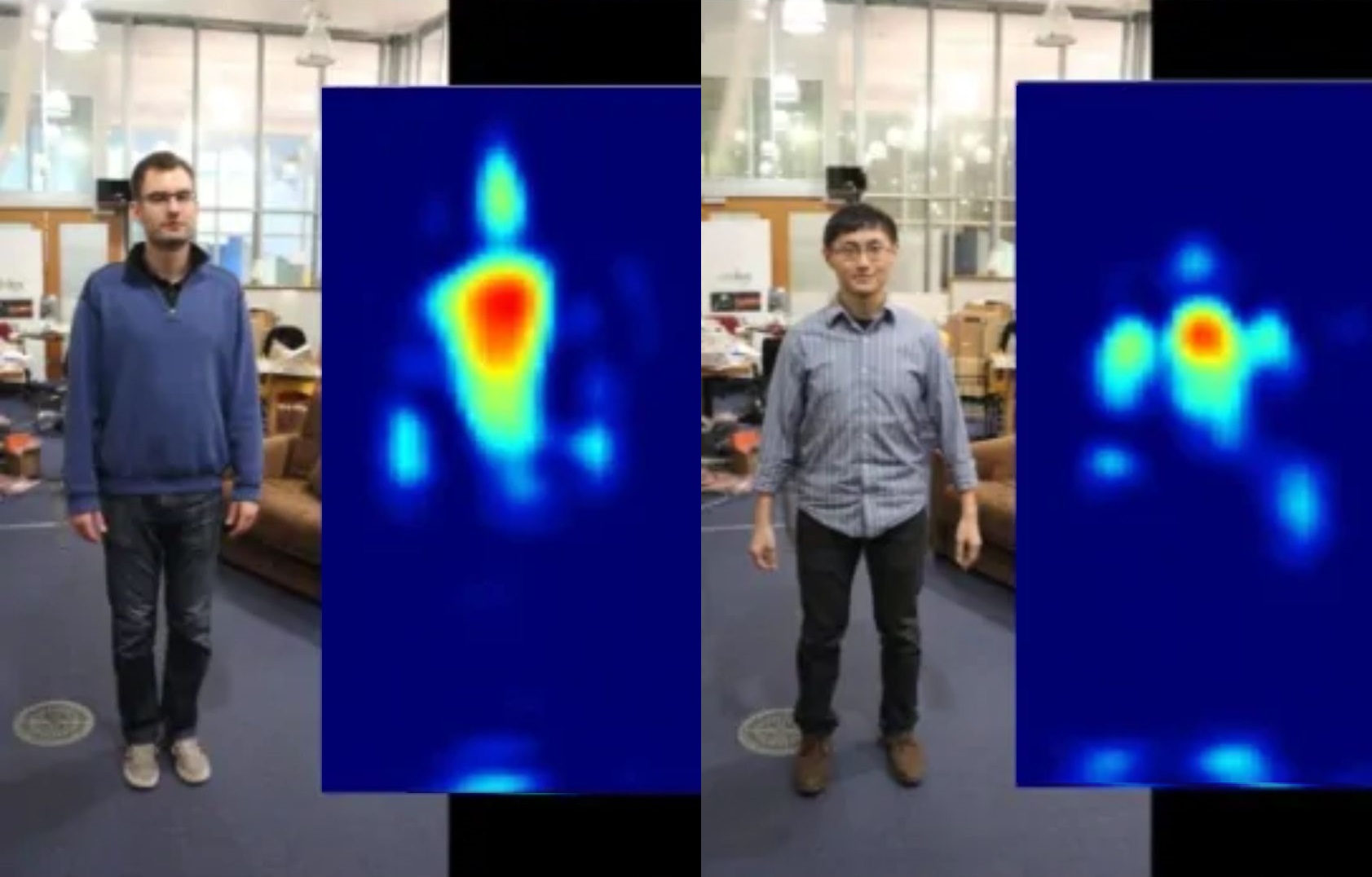

The team implemented power caps on graphics processing units (GPUs), which are the chips responsible for powering energy-hungry AI models.

For instance, training OpenAI’s GPT-3 large language model on NVIDIA GPUs consumed an estimated 1,300 megawatt-hours of electricity, roughly what 1,450 average U.S. households use in a month. OpenAI is estimated to have used around 10,000 GPUs to train GPT-3.
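As a rough sanity check on the household comparison, the sketch below converts the reported 1,300 MWh into household-months. The average U.S. household consumption of 886 kWh per month is an assumption (a ballpark figure; substitute whichever statistic you trust), not a number from the article.

```python
# Rough conversion of a training run's electricity use into household-months.
# ASSUMPTION: an average U.S. household uses about 886 kWh per month.
# This is a ballpark figure, not one taken from the article.
TRAINING_ENERGY_MWH = 1_300      # reported estimate for GPT-3 training
HOUSEHOLD_KWH_PER_MONTH = 886    # assumed household average

def household_months(energy_mwh: float, kwh_per_month: float) -> float:
    """Return how many household-months of electricity `energy_mwh` represents."""
    energy_kwh = energy_mwh * 1_000  # 1 MWh = 1,000 kWh
    return energy_kwh / kwh_per_month

months = household_months(TRAINING_ENERGY_MWH, HOUSEHOLD_KWH_PER_MONTH)
print(f"{months:,.0f} household-months")      # 1,467 household-months
print(f"{months / 12:,.0f} household-years")  # 122 household-years
```

Read the other way, the same energy would supply roughly 120 households for a full year.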

By imposing these power caps, the researchers reduced the energy consumption of AI models by 12% to 15%. The trade-off was longer training times: in one experiment, capping the GPUs used to train Google’s BERT language model at 150 watts extended training from 80 to 82 hours.
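Because energy is power multiplied by time, a cap saves energy only if the slower, capped run still draws less in total. The sketch below works through the BERT figures under a simplifying assumption: the capped GPU draws the full 150 W for all 82 hours, which gives an upper bound on capped energy. The function names are illustrative only.

```python
# Break-even check for a GPU power cap, per GPU.
# Energy = average power x time, so a cap saves energy only if
#   P_cap * t_capped < P_uncapped * t_uncapped
# Reported BERT figures: 150 W cap, training time 80 h -> 82 h.
# ASSUMPTION: the capped GPU draws the full 150 W for the whole run,
# which overstates capped energy (real draw can sit below the cap).

def time_overhead(t_base_h: float, t_capped_h: float) -> float:
    """Fractional slowdown caused by the cap."""
    return t_capped_h / t_base_h - 1

def capped_energy_kwh(p_cap_w: float, t_capped_h: float) -> float:
    """Upper bound on per-GPU energy with the cap in place (watt-hours -> kWh)."""
    return p_cap_w * t_capped_h / 1_000

def break_even_power_w(p_cap_w: float, t_base_h: float, t_capped_h: float) -> float:
    """Uncapped average power above which the cap saves energy."""
    return p_cap_w * t_capped_h / t_base_h

print(f"slowdown: {time_overhead(80, 82):.1%}")                                # 2.5%
print(f"capped energy: {capped_energy_kwh(150, 82):.2f} kWh per GPU")          # 12.30
print(f"break-even uncapped power: {break_even_power_w(150, 80, 82):.2f} W")   # 153.75
```

Under that assumption, the cap saves energy whenever the uncapped run averaged more than about 154 W per GPU.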

The team also developed software that lets data center operators set these power limits either across the entire system or on a per-job basis.
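The article does not describe how the team's software resolves system-wide and per-job limits. The sketch below shows one plausible policy, in which the strictest applicable limit wins; the class and method names, and the 300 W hardware maximum in the usage lines, are hypothetical and not part of LLSC's tooling.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class GpuPowerPolicy:
    """Hypothetical policy: an optional site-wide cap plus per-job caps, in watts."""
    system_cap_w: Optional[float] = None
    job_caps_w: dict[str, float] = field(default_factory=dict)

    def effective_cap(self, job_id: str, hw_max_w: float) -> float:
        """Return the strictest limit that applies to `job_id`.

        `hw_max_w` is the GPU's maximum supported power limit, so the
        result never exceeds what the hardware allows.
        """
        candidates = [hw_max_w]
        if self.system_cap_w is not None:
            candidates.append(self.system_cap_w)
        if job_id in self.job_caps_w:
            candidates.append(self.job_caps_w[job_id])
        return min(candidates)

# Hypothetical values: 300 W hardware maximum, 250 W site cap, 150 W for one job.
policy = GpuPowerPolicy(system_cap_w=250, job_caps_w={"bert-train": 150})
print(policy.effective_cap("bert-train", hw_max_w=300))         # 150
print(policy.effective_cap("other-job", hw_max_w=300))          # 250
print(GpuPowerPolicy().effective_cap("any-job", hw_max_w=300))  # 300
```

Taking the minimum means a job can tighten the site-wide cap but never loosen it. On NVIDIA hardware, an operator could then apply the resulting value with `nvidia-smi -pl <watts>`, which requires administrator privileges.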

The results are evident at LLSC, where the GPUs in its supercomputers now run 30 degrees Fahrenheit (about 17 degrees Celsius) cooler. This eases the load on the cooling system and also improves the reliability and lifespan of the hardware.

A report by the International Energy Agency (IEA) emphasized that demand for digital technologies and services will grow rapidly over the next decade. To curb the resulting growth in emissions, it will be essential to improve energy efficiency, invest in zero-carbon electricity, and decarbonize supply chains.

The report also underscored the necessity of robust climate policies to ensure that digital technologies are applied to reduce emissions, rather than exacerbate them.

The MIT team also found another way to cut energy consumption. Training an AI model requires large amounts of data and often involves testing thousands of configurations to find the best parameters.

The researchers reduced this energy-intensive trial and error by building a model that predicts how different configurations are likely to perform. Configurations predicted to perform poorly were stopped early, cutting the energy used for model training by 80%.
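The article does not detail the MIT team's performance predictor. As a stand-in, the sketch below uses a simple learning-curve extrapolation: it fits each configuration's first few validation-accuracy values to a + b·ln(epoch), projects the final accuracy, and stops configurations whose projection trails the best one by more than a margin. The curves, margin, and function names are hypothetical, and the savings it reports apply only to this toy example.

```python
import math

def fit_log_linear(values: list[float]) -> tuple[float, float]:
    """Least-squares fit of values[i] ~ a + b * ln(i + 1); returns (a, b)."""
    xs = [math.log(i + 1) for i in range(len(values))]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, values))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def predict_final(values: list[float], total_epochs: int) -> float:
    """Extrapolate an early learning curve to the last epoch."""
    a, b = fit_log_linear(values)
    return a + b * math.log(total_epochs)

def screen(curves: dict[str, list[float]], probe_epochs: int,
           total_epochs: int, margin: float) -> tuple[list[str], float]:
    """Keep configs predicted to land within `margin` of the best one.

    Returns the surviving config names and the fraction of epochs saved
    compared with training every config for `total_epochs`.
    """
    predictions = {name: predict_final(curve[:probe_epochs], total_epochs)
                   for name, curve in curves.items()}
    best = max(predictions.values())
    survivors = [name for name, p in predictions.items() if p >= best - margin]
    epochs_used = (probe_epochs * len(curves)
                   + (total_epochs - probe_epochs) * len(survivors))
    saved = 1 - epochs_used / (total_epochs * len(curves))
    return survivors, saved

def synthetic_curve(a: float, b: float, epochs: int) -> list[float]:
    """Hypothetical validation-accuracy curve following a + b * ln(epoch)."""
    return [a + b * math.log(epoch) for epoch in range(1, epochs + 1)]

TOTAL = 20
curves = {
    "good": synthetic_curve(0.60, 0.08, TOTAL),
    "ok":   synthetic_curve(0.55, 0.07, TOTAL),
    "bad":  synthetic_curve(0.40, 0.05, TOTAL),
    "near": synthetic_curve(0.58, 0.08, TOTAL),
}
survivors, saved = screen(curves, probe_epochs=3, total_epochs=TOTAL, margin=0.05)
print(survivors)                       # ['good', 'near']
print(f"{saved:.1%} of epochs saved")  # 42.5% of epochs saved
```

With these made-up curves, two of the four configurations are stopped after three epochs, saving 42.5% of the training epochs. Real learning curves are noisier, so a practical predictor would need more probe epochs or a richer model.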